Cursor is Hiring an Analytics Engineer

What skills and experience do you need to work for one of the hottest AI software companies?

As someone who recently went through the job search process, I know how exhausting and difficult it can be. The worst part was spending so much time interviewing with companies that didn’t align with what I wanted in my career.

So, when I find a company doing cool things that also has an open analytics engineering role, I want to share it with you!

If you aren’t familiar with Cursor, it is an AI-enabled code editor built on top of VS Code. It builds AI directly into your coding workflow, helping you develop faster and more accurately.

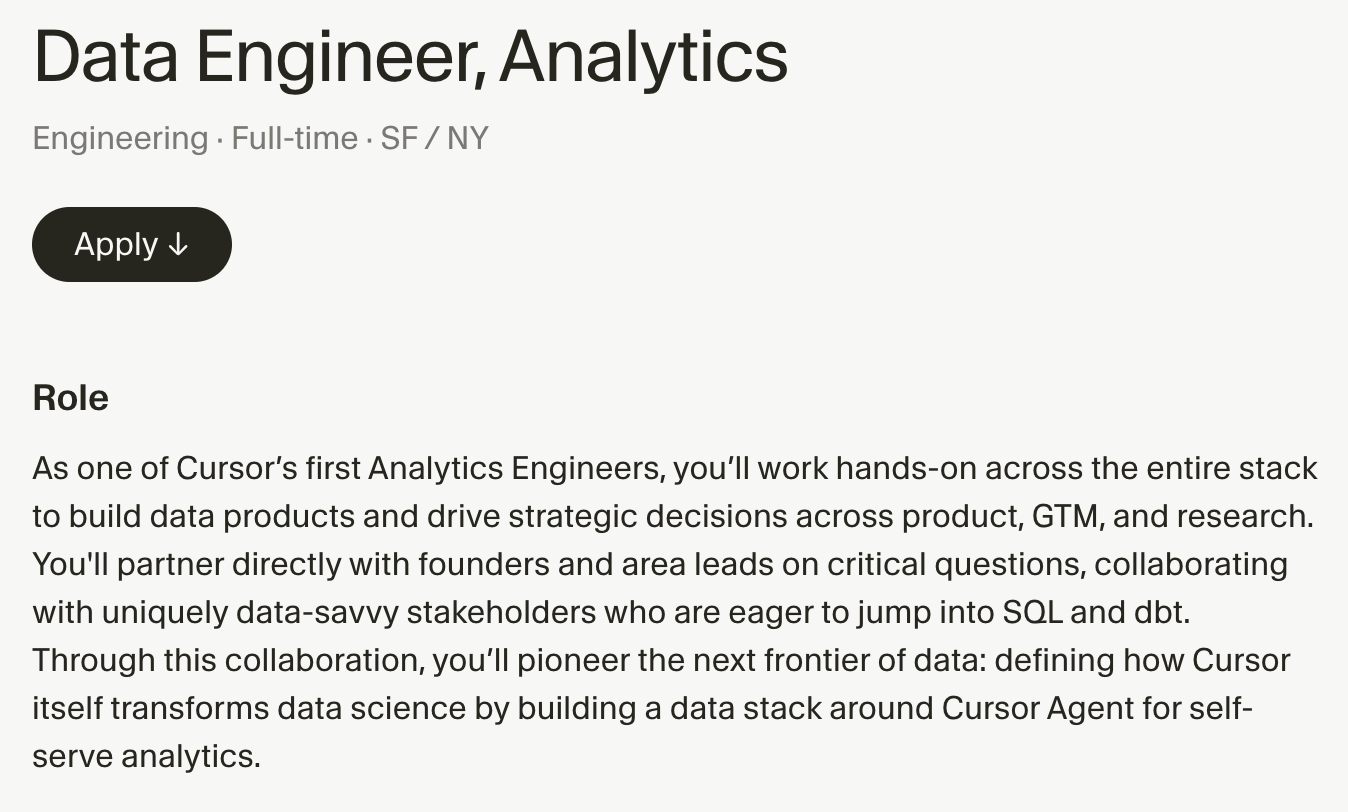

Cursor recently shared that they are hiring a Data Engineer, Analytics.

Let’s walk through these requirements and what exactly they are looking for!

Whether you are applying to new roles now or looking to upskill as an analytics engineer, you can use these requirements to guide your own learning and development.

Evaluate new tooling and AI integrations.

Knowing how to choose the tool that is right for your company, team, and use case is surprisingly difficult. It’s easy to get swept up in the hype around what everyone else is doing, or in flashy bells and whistles. When evaluating a tool, focus on the factors that matter most for your specific problem.

I like to think about the following:

- How much does it cost?
- Who on my team will own this? What’s the learning curve? How many resources does it take to manage this tool?
- Are there any roadblocks I can foresee with implementing it? Will other teams be receptive to this new tool?
- How well does it integrate with what we’ve already built?
- Does the tool offer the support I need?
- Can I grow and scale to where I want to be in 3-5 years with this tool?

It’s really important to understand all of these aspects before signing any kind of contract. You need to make sure that your team has the capacity to manage a new tool (including the work of migration and maintenance), that the other teams interacting with it will accept it, and that it fits your long-term vision.

You don’t want to say yes to something that will need to be replaced a year or two from now.
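If you want to make the questions above more concrete when comparing vendors, one option is a simple weighted scorecard. The sketch below is only an illustration: the criterion names, weights, and 1-5 scores are hypothetical placeholders rather than a standard method, and the resulting number should start a conversation with your team, not end it.

```python
from dataclasses import dataclass

# Hypothetical weights for the questions above; they must sum to 1.0.
# Adjust them to reflect what matters most for your team.
WEIGHTS = {
    "cost": 0.20,
    "ownership_and_learning_curve": 0.20,
    "team_adoption": 0.15,
    "integration": 0.20,
    "support": 0.10,
    "scalability": 0.15,
}


@dataclass
class ToolRating:
    name: str
    scores: dict[str, int]  # 1 (poor) to 5 (excellent); higher is always better

    def weighted_score(self, weights: dict[str, float]) -> float:
        # Refuse to score a tool that skipped a criterion.
        missing = set(weights) - set(self.scores)
        if missing:
            raise ValueError(f"{self.name} is missing scores for: {sorted(missing)}")
        return sum(weights[c] * self.scores[c] for c in weights)


def rank_tools(tools: list[ToolRating], weights: dict[str, float] = WEIGHTS) -> list[tuple[str, float]]:
    """Return (name, weighted score) pairs sorted from best to worst."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    ranked = [(t.name, round(t.weighted_score(weights), 2)) for t in tools]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical ratings for two candidate tools.
tools = [
    ToolRating("Tool A", {"cost": 2, "ownership_and_learning_curve": 4, "team_adoption": 3,
                          "integration": 5, "support": 4, "scalability": 4}),
    ToolRating("Tool B", {"cost": 5, "ownership_and_learning_curve": 3, "team_adoption": 4,
                          "integration": 2, "support": 3, "scalability": 2}),
]
ranking = rank_tools(tools)
print(ranking)  # → [('Tool A', 3.65), ('Tool B', 3.2)]
```

Scoring every criterion so that higher is better (rating team adoption rather than adoption risk, for example) keeps the weighted sum easy to interpret. Here the cheaper tool loses because it integrates poorly and would be hard to scale.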

Write SQL and Python in your sleep.

SQL is a given for any analytics engineer. If you have the time, I also recommend learning another programming language, since it gives you more flexibility in what you can build outside of analytics.

Python has many applications, especially in analytics engineering. You can use it to:

- build data pipelines, including data ingestion and orchestration
- work with orchestration tools like Airflow, Prefect, and Dagster, which define pipelines in Python
- create scripts to clean and compare data sources
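As a small example of that last use, here is a minimal sketch of a script that cleans and reconciles two extracts of the same entity, assuming each source can be loaded as a list of dicts sharing a key column. The source names (`billing`, `crm`), the fields, and the whitespace-trimming rule are hypothetical; in practice the rows would come from a database query or an API rather than literals.

```python
from typing import Any

Row = dict[str, Any]


def clean_row(row: Row) -> Row:
    """Trim stray whitespace from string values (a stand-in for real cleaning rules)."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}


def reconcile(source_a: list[Row], source_b: list[Row], key: str) -> dict[str, list]:
    """Compare two sources by a shared key after cleaning.

    Returns keys found only in A, keys found only in B, and
    (key, [differing fields]) pairs for keys present in both.
    """
    a_by_key = {row[key]: clean_row(row) for row in source_a}
    b_by_key = {row[key]: clean_row(row) for row in source_b}

    only_in_a = sorted(a_by_key.keys() - b_by_key.keys())
    only_in_b = sorted(b_by_key.keys() - a_by_key.keys())

    mismatched = []
    for k in sorted(a_by_key.keys() & b_by_key.keys()):
        a_row, b_row = a_by_key[k], b_by_key[k]
        # A field counts as different if it is missing from one side or its values differ.
        diff_fields = sorted(f for f in a_row.keys() | b_row.keys() if a_row.get(f) != b_row.get(f))
        if diff_fields:
            mismatched.append((k, diff_fields))

    return {"only_in_a": only_in_a, "only_in_b": only_in_b, "mismatched": mismatched}


# Hypothetical extracts of the same users from two systems.
billing = [{"user_id": 1, "plan": "pro "}, {"user_id": 2, "plan": "free"}, {"user_id": 3, "plan": "pro"}]
crm = [{"user_id": 1, "plan": "pro"}, {"user_id": 2, "plan": "business"}, {"user_id": 4, "plan": "free"}]

report = reconcile(billing, crm, key="user_id")
print(report)  # → {'only_in_a': [3], 'only_in_b': [4], 'mismatched': [(2, ['plan'])]}
```

Cleaning before comparing matters: without the trim, user 1 would be flagged as a mismatch only because of a trailing space.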

Python is something I learned a few years ago, and I can easily pick it back up for different projects, even if I haven’t used it in months. AI makes it even easier to brush up on forgotten skills and use them to build something impactful.

Take the time to learn the basics of something like Python once, and it will only make everything else easier in the age of AI.

Optimize queries for speed and cost on datasets that grow by billions of rows per day.

I can only imagine how much data Cursor users generate every day. Between all the code being written and the AI working behind the scenes to solve problems, you will most likely deal with a lot of messy data.

There are many ways to optimize your queries for speed, including:

- indexing your tables (clustered vs. non-clustered indexes)
- reading through explain plans and understanding where the bottlenecks are
- building models incrementally (with tools like dbt or SQLMesh)
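To see the first two techniques together, the sketch below uses Python’s built-in `sqlite3` module as a stand-in database and prints SQLite’s `EXPLAIN QUERY PLAN` output for the same query before and after adding an index. The `events` table and its columns are hypothetical, and the exact plan wording varies by SQLite version. Many cloud warehouses rely on partitioning and clustering rather than traditional indexes, so treat this as practice in reading a plan, not a recipe for any particular warehouse.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list[str]:
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    rows = conn.execute(f"EXPLAIN QUERY PLAN {sql}", params).fetchall()
    return [row[-1] for row in rows]  # 'detail' is the last column in every SQLite version


conn = sqlite3.connect(":memory:")
# Rows in a SQLite rowid table are stored in a B-tree keyed by the primary key,
# similar in spirit to a clustered index.
conn.execute("CREATE TABLE events (event_id INTEGER PRIMARY KEY, user_id INTEGER, event_type TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, event_type) VALUES (?, ?)",
    [(i % 1000, "accepted" if i % 3 else "rejected") for i in range(50_000)],
)

sql = "SELECT COUNT(*) FROM events WHERE user_id = ?"

# Without an index on user_id, SQLite has to scan every row to apply the filter.
before = query_plan(conn, sql, (42,))

# CREATE INDEX builds a separate B-tree on user_id, akin to a non-clustered index.
conn.execute("CREATE INDEX idx_events_user_id ON events (user_id)")
after = query_plan(conn, sql, (42,))

print("before:", before)  # a full-table SCAN
print("after: ", after)   # a SEARCH using idx_events_user_id
```

The habit this illustrates carries over to other engines: run the plan, look for full scans on large tables, change one thing, and run the plan again to confirm the bottleneck actually moved.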

I recommend starting here for understanding different techniques for dealing with large volumes of data, including scanning and optimization.
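The third technique, building models incrementally, comes down to transforming only the rows that arrived since the last run instead of rebuilding the whole table every time. Below is a minimal high-water-mark sketch in plain `sqlite3`, assuming a hypothetical `raw_events` table whose `event_id` only ever increases. Tools like dbt and SQLMesh build this pattern into their incremental model types; this sketch only shows the core idea.

```python
import sqlite3


def run_incremental(conn: sqlite3.Connection) -> int:
    """Load only raw events newer than the latest one already in fct_events.

    Returns the number of rows inserted on this run.
    """
    conn.execute(
        """CREATE TABLE IF NOT EXISTS fct_events (
               event_id INTEGER PRIMARY KEY,
               user_id INTEGER,
               event_type TEXT
           )"""
    )
    # The high-water mark: the largest event_id processed so far (0 on the first run).
    (watermark,) = conn.execute("SELECT COALESCE(MAX(event_id), 0) FROM fct_events").fetchone()

    # Transform (trim + lowercase) and insert only the new rows.
    cur = conn.execute(
        """INSERT INTO fct_events (event_id, user_id, event_type)
           SELECT event_id, user_id, LOWER(TRIM(event_type))
           FROM raw_events
           WHERE event_id > ?""",
        (watermark,),
    )
    conn.commit()
    return cur.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_events (event_id INTEGER PRIMARY KEY, user_id INTEGER, event_type TEXT)")

conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", [(1, 10, " Accept "), (2, 11, "REJECT"), (3, 10, "accept")])
first_run = run_incremental(conn)   # processes events 1-3

conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", [(4, 12, "Accept"), (5, 11, " reject")])
second_run = run_incremental(conn)  # processes only events 4-5

third_run = run_incremental(conn)   # nothing new, so nothing is reprocessed
print(first_run, second_run, third_run)  # → 3 2 0
```

If events can arrive late with an id below the current watermark, this simple filter would skip them; a common mitigation is to reprocess a small lookback window and upsert on the key.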